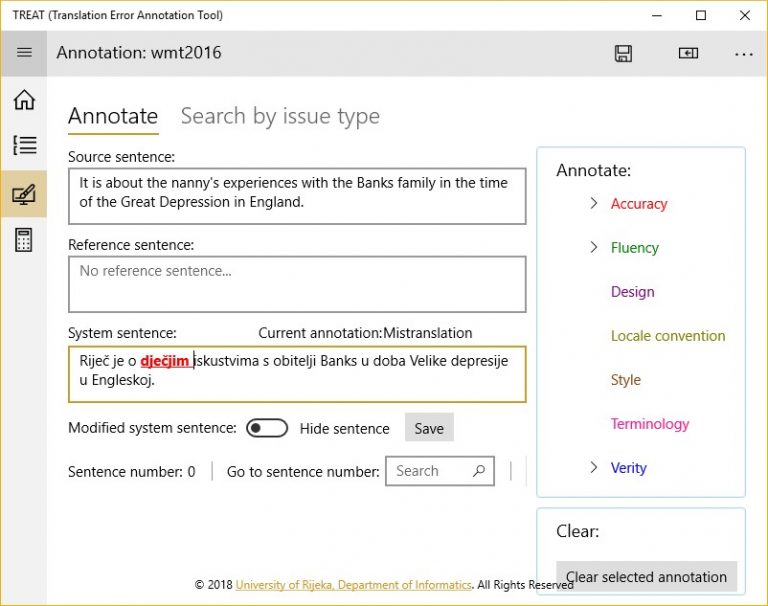

The aim of this research paper is to conduct a thorough analysis of inter-annotator agreement in error analysis, a process well known for its subjectivity and low levels of agreement. Because error analysis is tedious by nature and the available user interfaces differ considerably from what the average annotator is accustomed to, a user-friendly Windows 10 application with a more attractive user interface is developed to simplify the process. Translations are produced with the Google Translate engine, and English-Croatian is selected as the language pair. Since inter-annotator agreement has been widely debated and the need for guidelines has often been emphasized as crucial, the annotators are given a very detailed introduction to the error analysis process itself: a presentation listing the MQM guidelines, enriched with tricky cases. All annotators are native speakers of Croatian, the target language, and have a linguistic background. The results show that stronger agreement is associated with more similar annotator backgrounds, and that annotators should therefore be selected more carefully. Furthermore, a training phase on a similar test set is deemed necessary to achieve stronger agreement.