We introduce Pyramidal Recursive learning (PyRv), a novel method for text representation

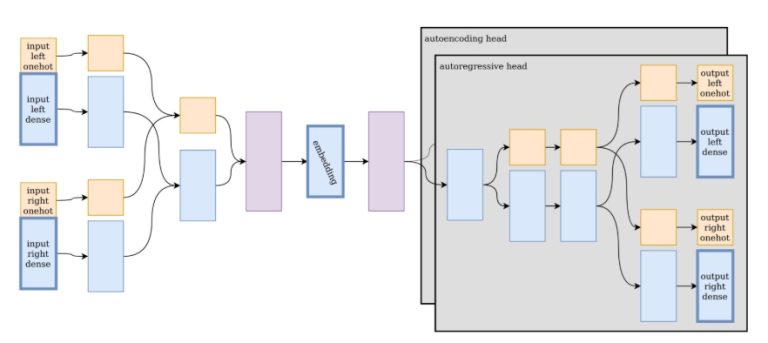

learning. This approach constructs a pyramidal hierarchy by recursively building representations of phrases, starting from tokens (characters, subwords, or words). At each level, N representations are recursively combined, resulting in N-1 representations on the level above, abstracting the input text from characters or subwords to words, phrases, and potentially sentences. The proposed method employs two learning approaches: autoencoding and autoregression. The autoencoding head decodes encoded representation pairs, while the autoregressive head predicts neighboring representations on both the left and right. This method exhibits four key properties: hierarchical representation, representation compositionality, representation decodability, and self-supervised learning. To implement and validate the proposed method, we train the Pyramidal Recursive Neural Network (PyRvNN) model. Evaluation metrics include autoencoder decodability, plagiarism detection, memorization, and readability. The accuracy of autoencoder decodability serves as an indicator of the validity of the four key properties. Preliminary assessments demonstrate promising results, particularly in machine-paraphrased plagiarism, text readability, and a memorization experiment.
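The pyramid construction and the two training heads can be illustrated with a short sketch. This is not the PyRvNN implementation. It rests on several assumptions. First, the representations on each level are combined as adjacent pairs, which is one way N representations can yield N-1. Second, the autoregressive neighbours are the adjacent nodes on the same level. The names `compose`, `build_pyramid` and `training_targets` are hypothetical, and `compose` (a tanh of the element-wise mean) is only a stand-in for the learned encoder.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]


def compose(left: Vector, right: Vector) -> Vector:
    """Hypothetical composition: tanh of the element-wise mean.

    A trained PyRvNN would use a learned encoder here; this stand-in only
    keeps the dimensionality fixed so that the pyramid can be built.
    """
    return [math.tanh((a + b) / 2.0) for a, b in zip(left, right)]


def build_pyramid(
    tokens: List[Vector],
    combine: Callable[[Vector, Vector], Vector] = compose,
) -> List[List[Vector]]:
    """Combine adjacent pairs level by level, so N vectors become N-1 vectors."""
    if not tokens:
        return []
    levels = [tokens]
    while len(levels[-1]) > 1:
        below = levels[-1]
        levels.append([combine(below[i], below[i + 1]) for i in range(len(below) - 1)])
    return levels


def training_targets(levels: List[List[Vector]]) -> List[Dict[str, object]]:
    """List, for every composed node, what each (assumed) head would predict.

    - autoencoding head: the pair of children the node was composed from
    - autoregressive head: the node's left and right neighbours on its own
      level (None at the edges)
    """
    targets = []
    for lvl in range(1, len(levels)):
        row, below = levels[lvl], levels[lvl - 1]
        for i, node in enumerate(row):
            targets.append({
                "level": lvl,
                "index": i,
                "node": node,
                "ae_pair": (below[i], below[i + 1]),
                "ar_left": row[i - 1] if i > 0 else None,
                "ar_right": row[i + 1] if i + 1 < len(row) else None,
            })
    return targets


if __name__ == "__main__":
    # Four toy 2-d token embeddings, e.g. one per subword.
    tokens = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5], [-0.2, 0.4]]
    levels = build_pyramid(tokens)
    print([len(level) for level in levels])  # [4, 3, 2, 1]
    print(len(training_targets(levels)))     # 6
```

Under this pairwise assumption, N input tokens produce N levels and N(N+1)/2 nodes in total. The number of compositions therefore grows quadratically with input length.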