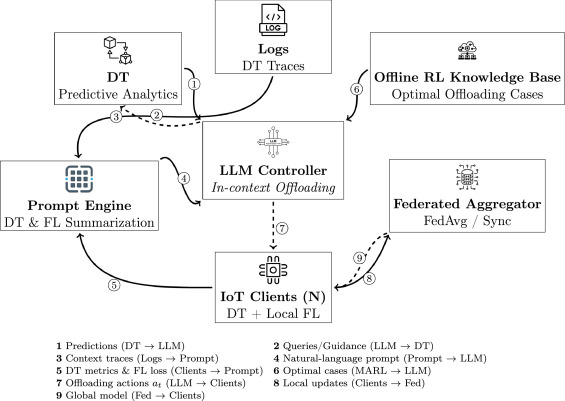

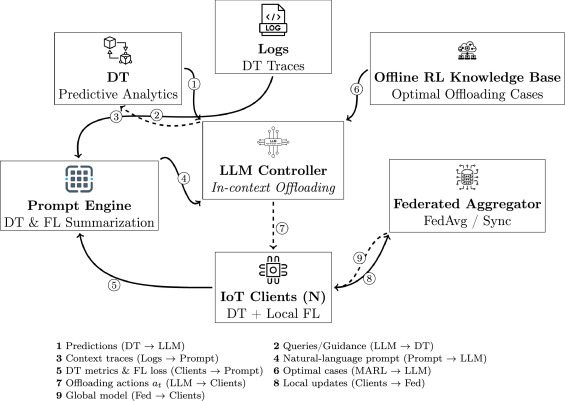

This study combines Digital Twins (DT), Federated Learning (FL), and computation offloading into a context-aware framework for resource management in IoT networks. DT models can predict battery levels, CPU usage, and network delays to assist reinforcement learning (RL) agents, but earlier RL-based controllers require extensive training and adapt slowly to changing conditions. To address this limitation, we propose a Large Language Model (LLM)-assisted offloading method that converts real-time DT predictions, together with selected historical examples from Explainable DT with Federated Multi-Agent RL (MARL), into structured natural-language prompts. Through in-context learning, the LLM infers offloading strategies without retraining, while FL aligns global convergence metrics to balance inference accuracy against energy efficiency. Simulations of baseline and unstable wireless network scenarios show that the LLM controller consistently maintains near-optimal latency while reducing energy use. In a baseline scenario with devices, the LLM achieves an average latency of 252 ms, only 5 % higher than edge offloading, while cutting energy consumption by around 20 %. Under unstable wireless conditions, it achieves an average latency of 276 ms with an energy use of 0.122 J, compared with 0.154 J for edge offloading. These findings indicate that LLM-based decision making enables scalable, adaptive, and energy-efficient task scheduling, offering a viable alternative to RL controllers in DT-enabled federated IoT systems.
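To make the prompt-construction step concrete, the following is a minimal sketch of how DT predictions and a few historical decisions might be serialized into a structured natural-language prompt for in-context learning. It is an illustration under stated assumptions, not the method's actual implementation: the names `DTPrediction`, `Example`, `describe`, and `build_prompt`, the field names, the two-action space (`local` or `edge`), and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DTPrediction:
    """Hypothetical container for one Digital Twin forecast of a device."""
    device_id: str
    battery_pct: float    # predicted battery level, percent
    cpu_util_pct: float   # predicted CPU usage, percent
    net_delay_ms: float   # predicted network delay, milliseconds


@dataclass
class Example:
    """Hypothetical historical record: a past state and the action taken."""
    state: DTPrediction
    action: str  # assumed action space: "local" or "edge"


def describe(p: DTPrediction) -> str:
    """Render one prediction as a compact, uniform text line."""
    return (f"device={p.device_id}, battery={p.battery_pct:.0f}%, "
            f"cpu={p.cpu_util_pct:.0f}%, delay={p.net_delay_ms:.0f} ms")


def build_prompt(current: DTPrediction, examples: List[Example]) -> str:
    """Assemble a few-shot prompt: instruction, past decisions, current state."""
    lines = [
        "You decide whether an IoT task runs locally or is offloaded to the edge.",
        "Past decisions:",
    ]
    for i, ex in enumerate(examples, 1):
        lines.append(f"{i}. {describe(ex.state)} -> {ex.action}")
    lines.append("Current state:")
    lines.append(describe(current))
    lines.append("Answer with exactly one word: local or edge.")
    return "\n".join(lines)
```

The resulting string would be sent to an LLM of choice, and the one-word reply parsed as the offloading decision. Because the historical examples travel inside the prompt, changing the example set changes the controller's behavior without any retraining.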