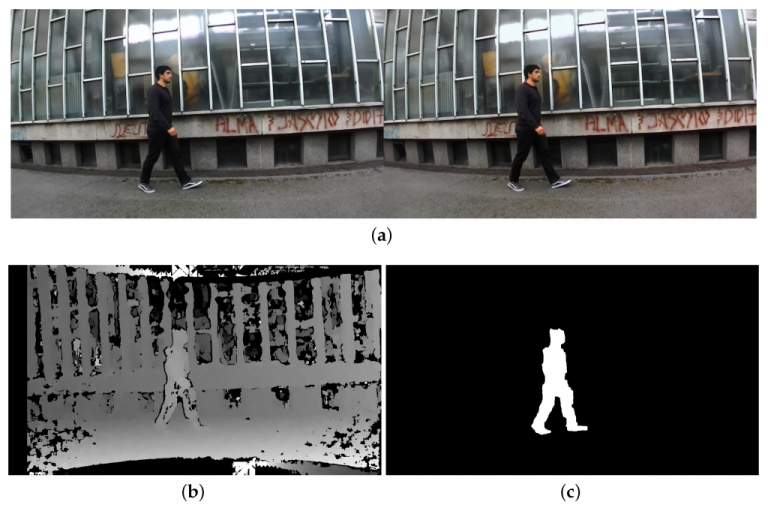

Each individual exhibits unique patterns during their gait cycles. This information can be extracted from a live video stream and used for subject identification. In appearance-based recognition methods, this is done by tracking the silhouettes of persons across gait cycles. In recent years, there has been a profusion of sensors that provide real-time depth data in addition to RGB video images. When such sensors are used for gait recognition, existing RGB appearance-based methods can be extended to achieve a substantial gain in recognition accuracy. In this paper, this is accomplished using information fusion techniques that combine features from extracted silhouettes, as used in traditional appearance-based methods, with the height feature, which can now be estimated from depth data. The height is estimated during the silhouette extraction step at minimal additional computational cost. Two approaches are proposed, both of which can be implemented easily as extensions to existing appearance-based methods. An extensive experimental evaluation was performed to provide insights into how much the recognition accuracy can be improved. The results are presented and discussed for different types of subjects and for populations with different height distributions.
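The abstract does not spell out the two proposed approaches, so the following is only a minimal sketch of one generic way to fuse a depth-derived height with an appearance feature: score-level fusion of normalized distances. It assumes a pinhole camera with vertical focal length `fy` (in pixels), a subject standing roughly fronto-parallel at a single depth, and precomputed appearance feature vectors (for example, a flattened gait energy image). The names `estimate_height`, `min_max`, `identify`, the weight `w`, and the toy gallery are hypothetical and are not taken from the paper.

```python
import math
from typing import Dict, List, Sequence, Tuple


def estimate_height(top_row: int, bottom_row: int, depth_m: float, fy: float) -> float:
    """Approximate metric height of a silhouette via the pinhole camera model.

    Hypothetical helper. Assumes the whole body lies at roughly the same depth
    (fronto-parallel), so a vertical extent of N pixels maps to N * depth / fy metres.
    """
    return (bottom_row - top_row) * depth_m / fy


def min_max(values: List[float]) -> List[float]:
    """Scale distances to [0, 1] so that the two modalities are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def identify(
    probe_feat: Sequence[float],
    probe_height: float,
    gallery: Dict[str, Tuple[Sequence[float], float]],
    w: float = 0.7,
) -> str:
    """Return the gallery ID with the lowest fused distance (hypothetical scheme).

    w = 1.0 reduces to appearance-only matching; smaller w gives height more weight.
    """
    ids = list(gallery)
    # Appearance distance: Euclidean distance between feature vectors.
    d_app = [math.dist(probe_feat, gallery[s][0]) for s in ids]
    # Height distance: absolute difference in metres.
    d_h = [abs(probe_height - gallery[s][1]) for s in ids]
    n_app, n_h = min_max(d_app), min_max(d_h)
    # Weighted sum of normalized distances; lower means a better match.
    scores = [w * a + (1.0 - w) * h for a, h in zip(n_app, n_h)]
    return ids[min(range(len(ids)), key=scores.__getitem__)]


if __name__ == "__main__":
    # Toy gallery: subject ID -> (appearance feature vector, height in metres).
    gallery = {
        "A": ([0.20, 0.40, 0.10], 1.80),
        "B": ([0.21, 0.41, 0.10], 1.62),
        "C": ([0.90, 0.10, 0.50], 1.75),
    }
    h = estimate_height(top_row=100, bottom_row=460, depth_m=3.0, fy=600.0)
    probe = [0.206, 0.406, 0.10]
    print(f"estimated height: {h:.2f} m")                          # 1.80 m
    print("appearance only:", identify(probe, h, gallery, w=1.0))  # B
    print("fused:", identify(probe, h, gallery, w=0.7))            # A
```

In this toy case, subjects A and B have nearly identical appearance features and appearance alone picks B, while the height estimate separates them and the fused score picks A. This illustrates the kind of gain the abstract describes; it does not reproduce the paper's results. Score-level fusion is chosen here only because it extends an existing appearance-based matcher without retraining; feature-level fusion (concatenating a scaled height onto the feature vector) would be another plausible option.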