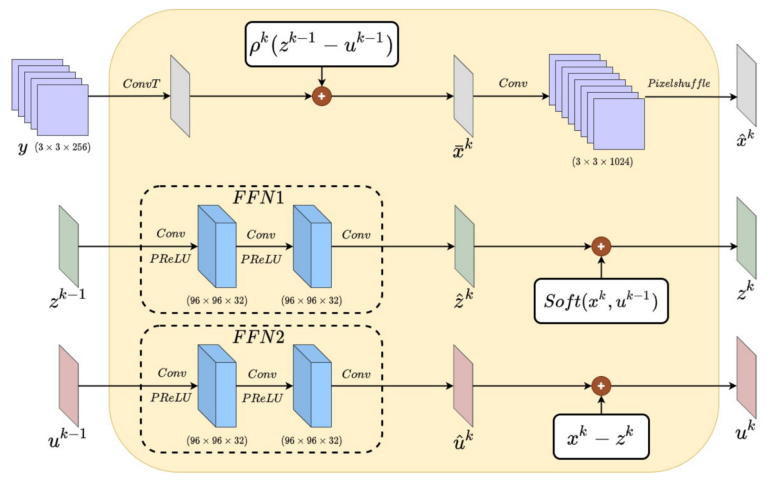

Deep learning has demonstrated exceptional learning capabilities, leading to the development of various deep unfolding networks for image reconstruction. However, current deep unfolding networks often replace entire steps of traditional optimization algorithms with neural networks, which compromises the interpretability of those algorithms. In addition, each iteration of the unfolding process may lose some image information, degrading reconstruction quality. This paper proposes LSRA-CSNet, a deep unfolding Alternating Direction Method of Multipliers (ADMM) network for compressive sensing (CS) image reconstruction that incorporates a long-short term residual optimization mechanism. LSRA-CSNet is constructed by stacking multiple stages, each consisting of a Fast ADMM Block (FAB) and a Residual Optimization Block (ROB). In FAB, inspired by the Woodbury matrix identity, we propose a fast version of the ADMM algorithm. Rather than replacing steps of ADMM with neural networks, we use CNNs to replace only some of the matrix operations. ROB consists of the Short-Term Residual Refinement Module (SRRM) and the Long-Term Residual Feedback Module (LRFM), which refine reconstruction details by leveraging inter-stage image residuals and multi-stage measurement residuals, respectively. Experiments on four datasets show that LSRA-CSNet achieves higher reconstruction accuracy than existing CS image reconstruction networks.
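To illustrate why the Woodbury matrix identity can accelerate an ADMM step, the following minimal sketch compares two ways of solving a textbook ADMM x-update for a least-squares data term. It is not the FAB formulation itself, which the abstract does not specify. The update form $(A^\top A + \rho I)x = A^\top y + \rho v$, the names `x_update_direct` and `x_update_woodbury`, the random sampling matrix `A`, and the values of `m`, `n`, and `rho` are all assumptions made for illustration. The identity used is $(A^\top A + \rho I)^{-1} = \tfrac{1}{\rho}\left(I - A^\top(\rho I + AA^\top)^{-1}A\right)$, which replaces an $n \times n$ solve with an $m \times m$ one, where $m \ll n$ in compressive sensing.

```python
import numpy as np

def x_update_direct(A, y, v, rho):
    """Solve (A^T A + rho I) x = A^T y + rho v with an n x n linear system."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + rho * np.eye(n), A.T @ y + rho * v)

def x_update_woodbury(A, y, v, rho):
    """Same solution via the Woodbury identity; only an m x m system is solved."""
    m = A.shape[0]
    b = A.T @ y + rho * v
    # (A^T A + rho I)^{-1} b = (b - A^T (rho I + A A^T)^{-1} A b) / rho
    small = np.linalg.solve(rho * np.eye(m) + A @ A.T, A @ b)
    return (b - A.T @ small) / rho

# Hypothetical problem sizes: m measurements of an n-dimensional signal (m << n).
rng = np.random.default_rng(0)
m, n = 16, 64
A = rng.standard_normal((m, n)) / np.sqrt(m)   # assumed random sampling matrix
y = A @ rng.standard_normal(n)                 # simulated measurements
v = rng.standard_normal(n)                     # stands in for (z - u) in scaled ADMM
rho = 0.5                                      # assumed penalty parameter

x_direct = x_update_direct(A, y, v, rho)
x_fast = x_update_woodbury(A, y, v, rho)
max_gap = float(np.max(np.abs(x_direct - x_fast)))
print(f"max |x_direct - x_fast| = {max_gap:.2e}")
```

Both functions return the same vector up to floating-point error. The Woodbury variant only factors an $m \times m$ matrix, so its cost advantage grows as the sampling ratio $m/n$ decreases.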